Adam Kaufman Wiki - Exploring Key Concepts

When folks look up something like "Adam Kaufman wiki," they're often trying to get a quick grasp on a subject, hoping for clear, easy-to-digest facts about a person or a particular topic. It's almost like wanting a friendly guide to show you around a new idea, especially if it feels a bit complicated at first. People just want to get to the heart of things, without a lot of extra fuss, you know?

So, what sorts of things might someone discover when they search for "Adam Kaufman wiki" or similar phrases? Well, it turns out the word "Adam" pops up in a bunch of different places, from the foundational stories we tell about where we come from to the really clever ways we teach computers to learn. It’s pretty interesting how one simple name can connect so many different areas of thought and discovery, in a way.

This means that depending on what someone is really curious about, their search could lead them to all sorts of information. It could be about how computers figure things out, or maybe it’s about ancient tales that shape how we think about life and beginnings. We'll take a look at some of these ideas that are tied to the name "Adam," just to give you a sense of what's out there, you might say.

Table of Contents

- Understanding the Adam Algorithm: What's the Big Deal?

- Adam Algorithm and its Place in Modern Deep Learning

- How Does the Adam Algorithm Work?

- AdamW: What Is It and Why Does It Matter for Adam Kaufman Wiki Searches?

- The BP Algorithm and Its Relation to Adam Kaufman Wiki Topics

- The Story of Adam and Eve: A Classic Narrative

- Lilith: Adam's First Partner and Her Enduring Tale

- Life After Eden: The First Work of Adam and Eve

Understanding the Adam Algorithm: What's the Big Deal?

When you hear about "Adam" in the context of computers learning, people are often talking about a really popular method called the Adam algorithm. This method helps machine learning models, especially the ones that do deep learning, get better at what they do during their training time. It's like a coach helping an athlete improve their performance over and over again, you know?

This particular way of helping computers learn was put forward by Diederik P. Kingma and Jimmy Ba back in 2014. It’s a pretty smart combination of two helpful ideas: one is called "Momentum," which helps the learning process keep moving in a good direction, and the other is "adaptive learning rates," which means the step size is adjusted separately for each parameter based on the gradients seen so far. So, it's pretty clever, actually.

People who work with these kinds of systems have noticed something interesting: the Adam algorithm often makes the "training loss" go down faster than another common method called SGD. Training loss is basically how much the computer is messing up during its practice runs. However, there's a catch, or rather, a point of discussion. Sometimes, even if the training loss drops quickly, the "test accuracy" might not be as good as with SGD. Test accuracy is how well the computer performs on new information it hasn't seen before, so that's a pretty important thing to consider, isn't it?

The core idea behind Adam is to make the learning process more efficient. It does this by keeping track of past gradients and adjusting the learning speed for each different part of the model. This makes it a pretty effective choice for many modern deep learning tasks, which is why it comes up so often when people look into topics like "adam kaufman wiki" and related technical subjects. It's a fundamental piece of the puzzle, you could say.

Adam Algorithm and its Place in Modern Deep Learning

The Adam algorithm, as we just talked about, has become a pretty standard tool for anyone working with deep learning. It's used a lot because it often helps models learn more quickly and with less fuss than some older methods. Think of it like a popular tool in a builder's kit; it just gets the job done well for many different projects, you know?

Its ability to combine the "momentum" idea, which helps speed up training in the right direction, with "adaptive learning rates," which means it adjusts how big each learning step is, makes it very flexible. This flexibility is a big reason why it's so popular. It can handle a wide range of tasks, from recognizing pictures to understanding human speech, which is pretty neat, isn't it?

Even though it's widely used, there's still a lot of discussion among experts about its finer points. For instance, while it's great at getting training loss down, some folks are always looking for ways to make sure it also performs its very best on new, unseen data. That's where the "test accuracy" part comes in, and it's a constant area of refinement for researchers. It's an ongoing conversation, actually.

So, if you're looking up "adam kaufman wiki" hoping to understand modern machine learning, you'll likely run into the Adam algorithm pretty quickly. It's a foundational piece of how many powerful AI systems learn and improve, making it a key concept in this field. It's a pretty big deal, you might say, in the way these systems get smarter.

How Does the Adam Algorithm Work?

To put it simply, the Adam algorithm works by keeping track of two main things as a computer model learns: a running average of the past "gradients" (which tell the model which way to adjust), weighted so that recent gradients count more than older ones, and a similar running average of the squared past gradients. This might sound a bit technical, but it's really just a clever way for the algorithm to figure out the best path to take, you know?
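To make those two running averages a bit more concrete, here is a minimal sketch in plain Python for a single parameter. The function name `update_moments` is made up for this example, and the values 0.9 and 0.999 are the commonly cited default decay rates, used here as an assumption. The sketch also includes the usual "bias correction," which compensates for both averages starting at zero.

```python
def update_moments(m, v, grad, step, beta1=0.9, beta2=0.999):
    """Update the two running averages for one scalar parameter.

    m: running average of gradients (first moment)
    v: running average of squared gradients (second moment)
    step: 1-based count of updates so far, used for bias correction
    """
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # Both averages start at zero, so early values are biased toward zero.
    # Dividing by (1 - beta**step) compensates for that.
    m_hat = m / (1 - beta1 ** step)
    v_hat = v / (1 - beta2 ** step)
    return m, v, m_hat, v_hat


# Feed in the same gradient three times and watch the corrected averages.
m, v = 0.0, 0.0
for step, grad in enumerate([2.0, 2.0, 2.0], start=1):
    m, v, m_hat, v_hat = update_moments(m, v, grad, step)
    print(step, round(m_hat, 6), round(v_hat, 6))
```

With a constant gradient of 2.0, the corrected averages sit at about 2.0 and 4.0 from the very first step, even though the raw `m` and `v` are still close to zero.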

The first part, the average of the gradients, helps the learning process build up speed in the right direction, kind of like momentum. If the model keeps needing to adjust in a certain way, this part helps it move more confidently in that direction. It's a bit like pushing a swing; each push adds to the momentum, making it go higher, in a way.

The second part, the average of the squared gradients, helps the algorithm adjust how big its steps are for each individual parameter. Each update is divided by the square root of this average, so a parameter whose gradients have been consistently large gets its steps scaled down, while a parameter whose gradients have been small or infrequent gets relatively larger steps. This keeps any one part of the model from dominating the updates and makes the learning process much more efficient, as a matter of fact.

By combining these two ideas, Adam manages to adapt its learning rate for each individual parameter in the model. This is a big advantage over older methods that use a single learning rate for everything. It's why Adam is so effective at training complex deep learning models and why it's a common topic when people explore things like "adam kaufman wiki" for technical details. It's pretty smart, you know?
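The per-parameter behavior described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not a reference implementation: the helper `adam_step`, the `state` dictionary layout, and the toy loss with two very differently scaled weights are all invented for this example, and the hyperparameters are the commonly cited defaults apart from the learning rate.

```python
import math


def adam_step(params, grads, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam-style update in place to a list of float parameters.

    `state` holds the step counter and the per-parameter running averages.
    """
    state["t"] += 1
    t = state["t"]
    for i, g in enumerate(grads):
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        # Dividing by sqrt(v_hat) rescales the step for each parameter separately.
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)


# Toy loss (an assumption for illustration): 0.5 * (100 * w0**2 + 0.01 * w1**2).
curvature = [100.0, 0.01]
params = [1.0, 1.0]
state = {"t": 0, "m": [0.0, 0.0], "v": [0.0, 0.0]}

grads = [c * w for c, w in zip(curvature, params)]  # [100.0, 0.01]
adam_step(params, grads, state)
adam_moves = [1.0 - w for w in params]  # how far each weight moved

# Plain gradient descent with the same learning rate, for comparison.
sgd_moves = [0.01 * g for g in grads]

print("gradients: ", grads)
print("Adam moves:", adam_moves)
print("SGD moves: ", sgd_moves)
```

On this first step, both weights move by roughly the learning rate under the Adam-style update, even though their gradients differ by four orders of magnitude. Plain gradient descent with a single learning rate moves the first weight a full 1.0 and the second by only about 0.0001.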

AdamW: What Is It and Why Does It Matter for Adam Kaufman Wiki Searches?

So, we've talked about the Adam algorithm, which is pretty good, but as with many things in technology, people are always looking for ways to make things even better. This is where something called AdamW comes into the picture. It's basically an improved version of the original Adam, designed to fix a little issue that the first one had, you might say.

The original Adam algorithm had a slight problem with something called "L2 regularization." This is a technique that helps prevent machine learning models from becoming too focused on the training data, which can make them less useful when they see new information. It's like a coach telling an athlete not to just memorize the playbook, but to truly understand the game. However, with Adam, the L2 penalty is added to the gradient, so it gets rescaled by the same adaptive step sizes as everything else. Weights with a history of large gradients end up being regularized less than intended, which wasn't ideal, you know?

AdamW, proposed by Ilya Loshchilov and Frank Hutter, was created to solve this specific problem. It takes the weight decay out of the gradient and applies it directly to the weights as a separate step (often called "decoupled weight decay"), ensuring that it works as it should, even when using the Adam optimization method. This means that models trained with AdamW are often better at generalizing, or performing well on data they haven't seen before, which is a pretty big deal, actually.
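Here is a rough single-step sketch of that difference in plain Python. Everything in it is an assumption made for illustration: the function `one_step`, the `decoupled` flag, and the chosen numbers are not taken from any library. It only looks at the very first update, where the effect is easiest to see.

```python
import math


def one_step(w, grad, weight_decay, decoupled, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the weight after the first optimizer step (moments start at zero).

    decoupled=False: Adam with an L2 penalty folded into the gradient.
    decoupled=True:  AdamW-style decay applied directly to the weight.
    """
    if not decoupled:
        grad = grad + weight_decay * w  # L2 term passes through the adaptive scaling
    m = (1 - beta1) * grad
    v = (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1)  # bias correction for step 1
    v_hat = v / (1 - beta2)
    new_w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    if decoupled:
        new_w -= lr * weight_decay * w  # decay bypasses the adaptive scaling
    return new_w


def extra_shrink(grad, decoupled, w=1.0, weight_decay=0.1):
    """How much further the weight shrinks because weight decay is switched on."""
    without = one_step(w, grad, 0.0, decoupled)
    with_decay = one_step(w, grad, weight_decay, decoupled)
    return without - with_decay


for grad in (0.01, 100.0):
    print("grad", grad,
          "| L2 in gradient:", extra_shrink(grad, decoupled=False),
          "| decoupled:", extra_shrink(grad, decoupled=True))
```

In this one-step setting the L2 term folded into the gradient is almost entirely normalized away, so switching it on barely changes the update. The decoupled version shrinks the weight by `lr * weight_decay * w` regardless of how large the gradient is.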

For anyone looking into "adam kaufman wiki" for information on optimization methods, understanding AdamW is a good next step after learning about Adam. It shows how the field of machine learning is always refining its tools to get the best possible results, making these powerful systems even more useful in the real world. It's a pretty important refinement, you could say.

The BP Algorithm and Its Relation to Adam Kaufman Wiki Topics

Long before Adam came along, the "BP algorithm" was already a really fundamental part of how neural networks learn, and it still is. BP stands for Backpropagation, and it's basically the way a neural network figures out how to adjust its internal workings after it makes a mistake. It's like telling a student where they went wrong on a test so they can learn for next time, you know?

Backpropagation has long been the standard way to work out how a network's "weights" and "biases" (the internal numbers that determine how the network processes information) should change so that it gets better at its tasks. It was, and remains, the backbone of neural network training, you might say.

However, as deep learning models grew much larger and more complex, people started looking for more efficient ways to train them. BP is still how the gradients are calculated; the *optimizers* like Adam and RMSprop came along to make the *process* of using those gradients much faster and more stable. So, while BP tells you *how the error changes* with each weight, Adam decides *what update to make* with that information, which is a pretty important distinction, actually.
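That split between computing gradients and using them can be sketched with a tiny two-weight network in plain Python. The network shape, the function names (`forward`, `backprop`, `sgd_update`), and the numbers are all made up for this example. A plain gradient-descent update stands in for the optimizer to keep things short; an Adam-style update would slot into the same place.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden activation
    return h, w2 * h       # (hidden value, prediction)


def loss_fn(w1, w2, x, y):
    _, pred = forward(w1, w2, x)
    return (pred - y) ** 2


def backprop(w1, w2, x, y):
    """Return (dL/dw1, dL/dw2) by applying the chain rule from the loss backward."""
    h, pred = forward(w1, w2, x)
    d_pred = 2.0 * (pred - y)       # dL/dpred
    d_w2 = d_pred * h               # because pred = w2 * h
    d_h = d_pred * w2
    d_w1 = d_h * (1.0 - h * h) * x  # derivative of tanh is 1 - tanh^2
    return d_w1, d_w2


def sgd_update(weights, grads, lr=0.1):
    """The optimizer's job: turn gradients into new weights."""
    return [w - lr * g for w, g in zip(weights, grads)]


w1, w2, x, y = 0.5, -0.3, 1.5, 1.0
grads = backprop(w1, w2, x, y)

# Sanity check against a finite-difference estimate of dL/dw1.
delta = 1e-6
numeric_d_w1 = (loss_fn(w1 + delta, w2, x, y) - loss_fn(w1 - delta, w2, x, y)) / (2 * delta)

loss_before = loss_fn(w1, w2, x, y)
new_w1, new_w2 = sgd_update([w1, w2], grads)
loss_after = loss_fn(new_w1, new_w2, x, y)

print("backprop gradients:", grads)
print("numeric dL/dw1:    ", numeric_d_w1)
print("loss before:", loss_before, "after:", loss_after)
```

Notice that `backprop` never changes a weight and `sgd_update` never looks at the network. Swapping in a different optimizer only touches the second function.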

So, if you're exploring topics related to "adam kaufman wiki" and deep learning, you'll probably encounter discussions about how BP and modern optimizers fit together. It's a good way to see how the field has grown and found better ways to train these powerful computer brains, which is pretty fascinating, isn't it?

The Story of Adam and Eve: A Classic Narrative

Beyond the world of computer learning, the name "Adam" also brings to mind one of the oldest and most widely known stories in human history: the tale of Adam and Eve. This narrative, found in the Book of Genesis, speaks about the very beginnings of humanity and our place in the world. It’s a pretty foundational story for many cultures, you know?

The story tells us that a divine being formed Adam from the dust of the earth. Then, to create a companion for Adam, Eve was brought into existence from one of Adam's ribs. This part of the story, about the rib, has sparked a lot of discussion and interpretation over time. For example, some scholars, like biblical expert Ziony Zevit, have offered different ideas about what "rib" might have really meant in the original text, which is pretty interesting, actually.

This narrative also touches upon big questions, such as the origin of sin and death in the world. It tells of the first "sinner" and the consequences that followed, leading to a change in the way humans lived. The Wisdom of Solomon, for instance, is one text that expresses views on human nature and divine justice that resonate with themes from this story. It's a pretty deep tale, you might say.

So, whether you're searching for "adam kaufman wiki" to learn about technology or to explore ancient texts, the story of Adam and Eve remains a powerful and thought-provoking piece of our shared human story. It continues to be discussed and interpreted, offering insights into human nature and the world around us, which is pretty cool, isn't it?

Lilith: Adam's First Partner and Her Enduring Tale

Interestingly, when people talk about the story of Adam, sometimes another figure comes up: Lilith. While not in the mainstream biblical account, Lilith appears in various ancient texts and folklore as, arguably, Adam's very first partner, created at the same time and in the same way as him, which is a bit different from Eve's story, you know?

In many of the tales about her, Lilith is shown as a figure of chaos, of temptation, and sometimes even as ungodly. She refused to be subservient to Adam, wanted to be his equal, and eventually left the Garden of Eden. This act of defiance made her a powerful and often terrifying force in the stories. She's a pretty strong character, actually.

Despite her portrayal as a "demoness" in some stories, Lilith has, in her every guise, cast a spell on humankind, inspiring countless works of art, literature, and discussion. Her story explores themes of independence, rebellion, and the struggle for equality, which are pretty timeless ideas, aren't they?

So, when you look into the broader narratives surrounding Adam, you might just find yourself learning about Lilith too. Her story adds another layer of complexity and depth to the ancient tales of creation and human relationships, making it a pretty rich area to explore, you might say, especially if you're looking for more than just the basics on something like "adam kaufman wiki."

Life After Eden: The First Work of Adam and Eve

The story of Adam and Eve doesn't end with their time in the Garden of Eden. After their expulsion, the narrative shifts to a different kind of existence, one where life involved effort and labor. This marks a big change from the "sweat-free paradise" they once knew, you know?

The concept of Adam and Eve "farming" away from the easy life of Eden speaks to the beginning of human toil and the need to work the land for sustenance. It's a portrayal of the origins of agriculture and the challenges that came with it. This shift from effortless abundance to necessary labor is a pretty significant part of the narrative, actually.

This period of their lives, focusing on work and survival outside the garden, has been a source of inspiration for many artists. For instance, the artwork "First Work of Adam and Eve" by Alonso Cano captures this very idea, showing them engaged in the new realities of their existence. It's a visual representation of a major turning point in their story, you might say.

So, whether you're interested in the technical aspects of an "Adam Kaufman wiki" search or the historical and mythical accounts, the story of Adam and Eve's life after Eden reminds us of enduring themes like work, consequence, and the human condition. It's a pretty powerful image, isn't it, of humanity's early struggles and adaptations?

Kaufman Chamber of Commerce | Kaufman TX

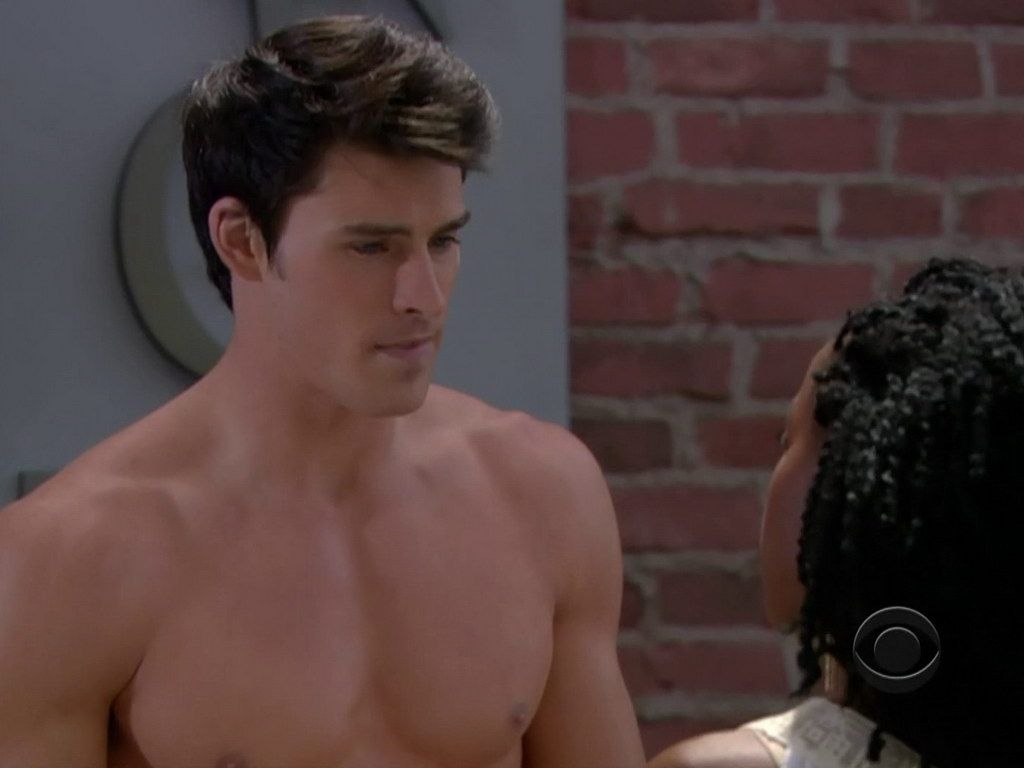

Adam Kaufman

Pictures of Adam Kaufman